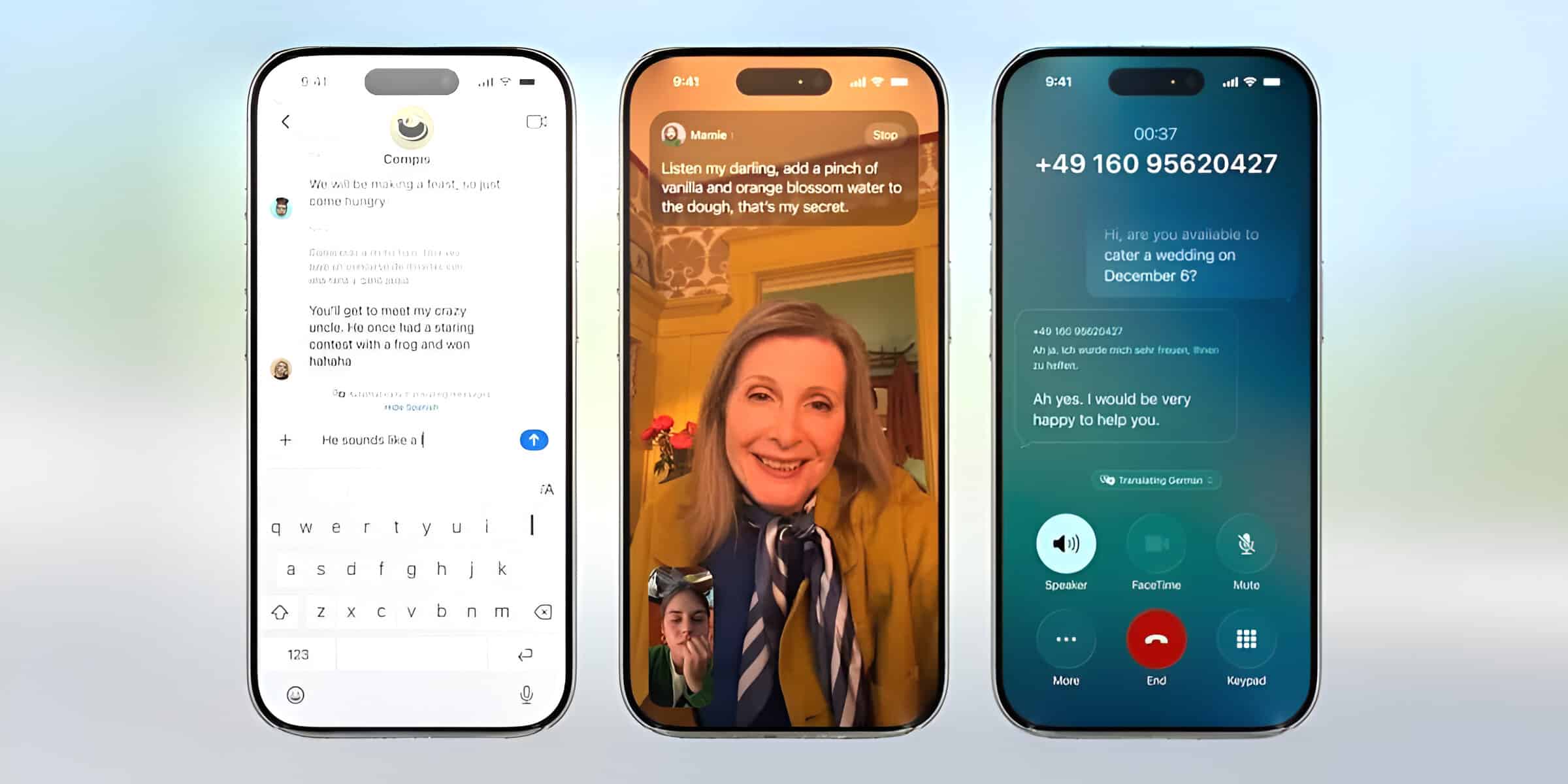

iOS 26 turns the iPhone into a live interpreter for calls, chats, and video sessions. Translations appear directly in Phone and Messages, and FaceTime adds live captions in supported languages without leaving the app. Audio-only calls can also translate spoken words aloud in real time.

On-Device Privacy and Performance

Apple runs all translations on-device using its foundation models. As a result, the system works offline and avoids sending transcripts or voice data to the cloud. Users download the language packs once, then get secure, low-latency translations.

Supported Devices and Languages

iOS 26 will arrive on iPhone 11 and newer, but live translation requires more recent models: iPhone 15 Pro, iPhone 15 Pro Max, and all iPhone 16 devices. In Messages, the feature handles English (U.S., UK), French, German, Italian, Japanese, Korean, Portuguese, Spanish, and Chinese (Simplified). Phone and FaceTime support a shorter list: English (U.S., UK), French, German, Portuguese, and Spanish. Apple says it will add more languages by late 2025.

Comparing to Rivals

Rivals like Google and Samsung rely on cloud processing for live translation. They often support dozens of languages, but they expose data to external servers. In contrast, Apple’s on-device approach supports fewer languages and aims to protect user privacy and ensure fast responses within native apps.

A New Era of Communication

Ultimately, live translation in iOS 26 could reshape how people travel, study, and work across borders. Students might translate foreign-language text in Messages. Travelers could converse freely on phone calls. Business teams may hold video meetings in multiple languages. As Apple refines this beta feature, the company cements its vision of seamless, private, and intelligent communication tools.